Fit

Fitted values are also called fits or ŷ. The fitted values are point estimates of the mean response for given values of the predictors. The values of the predictors are also called x-values.

Interpretation

Fitted values are calculated by entering the specific x-values for each observation in the data set into the model equation.

For example, if the equation is y = 5 + 10x, the fitted value for the x-value, 2, is 25 (25 = 5 + 10(2)).
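The arithmetic in this example can be checked with a few lines of code. This is a minimal sketch for a model with one predictor; the function name `fitted_value` is hypothetical and is not part of Minitab.

```python
def fitted_value(intercept, slope, x):
    """Return the fitted value for one x-value in a one-predictor linear model."""
    return intercept + slope * x

# Equation from the example: y = 5 + 10x, evaluated at x = 2
fit = fitted_value(5, 10, 2)
print(fit)  # 25
```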

Observations with fitted values that are very different from the observed value may be unusual. Observations with unusual predictor values may be influential. If Minitab determines that your data include unusual or influential values, your output includes the table of Fits and Diagnostics for Unusual Observations, which identifies these observations. The unusual observations that Minitab labels do not follow the proposed regression equation well. However, it is expected that you will have some unusual observations. For example, based on the criteria for large standardized residuals, you would expect roughly 5% of your observations to be flagged as having a large standardized residual. For more information on unusual values, go to Unusual observations.

SE Fit

The standard error of the fit (SE fit) estimates the variation in the estimated mean response for the specified variable settings. The calculation of the confidence interval for the mean response uses the standard error of the fit. Standard errors are always non-negative. The analysis calculates standard errors for models from the Stat menu and models from Linear Regression and Binary Logistic Regression from the Predictive Analytics Module.
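As a rough illustration, the sketch below computes the standard error of the fit for a least-squares line with a single predictor, using the textbook formula s·sqrt(1/n + (x0 − x̄)²/Sxx). The one-predictor setting, the function name `se_fit`, and the data are assumptions made for this example; they do not come from Minitab.

```python
import math

def se_fit(x, y, x0):
    """Standard error of the fit at x0 for a one-predictor least-squares line."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    intercept = y_bar - slope * x_bar
    # s is the estimated standard deviation of the errors (n - 2 degrees of freedom)
    sse = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    s = math.sqrt(sse / (n - 2))
    return s * math.sqrt(1 / n + (x0 - x_bar) ** 2 / sxx)

# Hypothetical data, for illustration only
x = [1, 2, 3, 4, 5, 6, 7, 8]
y = [2.1, 3.9, 6.2, 7.8, 10.1, 12.0, 13.9, 22.0]

se_center = se_fit(x, y, 4.5)  # at the mean of the x-values
se_edge = se_fit(x, y, 8)      # at the edge of the data
print(se_center < se_edge)
```

In this setting the standard error is smallest at the mean of the x-values and grows as the settings move away from it.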

Interpretation

Use the standard error of the fit to measure the precision of the estimate of the mean response. The smaller the standard error, the more precise the predicted mean response. For example, an analyst develops a model to predict delivery time. For one set of variable settings, the model predicts a mean delivery time of 3.80 days. The standard error of the fit for these settings is 0.08 days. For a second set of variable settings, the model produces the same mean delivery time with a standard error of the fit of 0.02 days. The analyst can be more confident that the mean delivery time for the second set of variable settings is close to 3.80 days.

With the fitted value, you can use the standard error of the fit to create a confidence interval for the mean response. For example, depending on the number of degrees of freedom, a 95% confidence interval extends approximately two standard errors above and below the predicted mean. For the delivery times, the 95% confidence interval for the predicted mean of 3.80 days when the standard error is 0.08 is (3.64, 3.96) days. You can be 95% confident that the population mean is within this range. When the standard error is 0.02, the 95% confidence interval is (3.76, 3.84) days. The confidence interval for the second set of variable settings is narrower because the standard error is smaller.
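The two intervals for the delivery times can be reproduced with the "approximately two standard errors" rule. This is a sketch of that approximation only; an exact interval would use a t-distribution multiplier that depends on the degrees of freedom. The function name `approx_ci` is hypothetical.

```python
def approx_ci(fit, se_fit, multiplier=2.0):
    """Approximate 95% CI for the mean response: fit +/- multiplier * SE fit."""
    margin = multiplier * se_fit
    return fit - margin, fit + margin

low1, high1 = approx_ci(3.80, 0.08)
low2, high2 = approx_ci(3.80, 0.02)
print(round(low1, 2), round(high1, 2))  # 3.64 3.96
print(round(low2, 2), round(high2, 2))  # 3.76 3.84
```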

Confidence interval for fit (95% CI)

These confidence intervals (CI) are ranges of values that are likely to contain the mean response for the population that has the observed values of the predictors or factors in the model.

Because samples are random, two samples from a population are unlikely to yield identical confidence intervals. But, if you sample many times, a certain percentage of the resulting confidence intervals contain the unknown population parameter. The percentage of these confidence intervals that contain the parameter is the confidence level of the interval.

- Point estimate

- The point estimate is the estimate of the parameter that is calculated from the sample data. The confidence interval is centered around this value.

- Margin of error

- The margin of error defines the width of the confidence interval and is determined by the observed variability in the sample, the sample size, and the confidence level. To calculate the upper limit of the confidence interval, the error margin is added to the point estimate. To calculate the lower limit of the confidence interval, the error margin is subtracted from the point estimate.

Interpretation

Use the confidence interval to assess the estimate of the fitted value for the observed values of the variables.

For example, with a 95% confidence level, you can be 95% confident that the confidence interval contains the population mean for the specified values of the predictor variables or factors in the model. The confidence interval helps you assess the practical significance of your results. Use your specialized knowledge to determine whether the confidence interval includes values that have practical significance for your situation. A wide confidence interval indicates that you can be less confident about the mean of future values. If the interval is too wide to be useful, consider increasing your sample size.

Resid

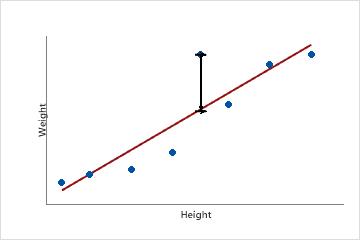

A residual (eᵢ) is the difference between an observed value (y) and the corresponding fitted value (ŷ), which is the value predicted by the model.

This scatterplot displays the weight versus the height for a sample of adult males. The fitted regression line represents the relationship between height and weight. If the height equals 6 feet, the fitted value for weight is 190 pounds. If the actual weight is 200 pounds, the residual is 10 pounds (200 − 190).
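The residual in the height and weight example is the observed value minus the fitted value. A minimal sketch, with a hypothetical function name:

```python
def residual(observed, fitted):
    """Residual = observed response minus fitted (predicted) response."""
    return observed - fitted

# Observed weight of 200 pounds, fitted weight of 190 pounds
resid = residual(200, 190)
print(resid)  # 10
```

A positive residual means the observation lies above the fitted line; a negative residual means it lies below.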

Interpretation

Plot the residuals to determine whether your model is adequate and meets the assumptions of regression. Examining the residuals can provide useful information about how well the model fits the data. In general, the residuals should be randomly distributed with no obvious patterns and no unusual values. If Minitab determines that your data include unusual observations, it identifies those observations in the Fits and Diagnostics for Unusual Observations table in the output. The observations that Minitab labels as unusual do not follow the proposed regression equation well. However, it is expected that you will have some unusual observations. For example, based on the criteria for large residuals, you would expect roughly 5% of your observations to be flagged as having a large residual. For more information on unusual values, go to Unusual observations.

Std Resid

The standardized residual equals the value of a residual (ei) divided by an estimate of its standard deviation.

Interpretation

Use the standardized residuals to help you detect outliers. Standardized residuals greater than 2 or less than −2 are usually considered large. The Fits and Diagnostics for Unusual Observations table identifies these observations with an 'R'. The observations that Minitab labels do not follow the proposed regression equation well. However, it is expected that you will have some unusual observations. For example, based on the criteria for large standardized residuals, you would expect roughly 5% of your observations to be flagged as having a large standardized residual. For more information, go to Unusual observations.

Standardized residuals are useful because raw residuals might not be good indicators of outliers. The variance of each raw residual can differ by the x-values associated with it. This unequal variation causes it to be difficult to assess the magnitudes of the raw residuals. Standardizing the residuals solves this problem by converting the different variances to a common scale.
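The sketch below shows one common way to standardize residuals for a least-squares line with a single predictor: each residual is divided by s·sqrt(1 − hᵢ), where hᵢ is the leverage of the observation. The one-predictor setting, the function name, and the data are assumptions made for illustration.

```python
import math

def standardized_residuals(x, y):
    """Standardized residuals e_i / (s * sqrt(1 - h_i)) for a one-predictor line."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    intercept = y_bar - slope * x_bar
    resid = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    s = math.sqrt(sum(e ** 2 for e in resid) / (n - 2))
    # Leverage depends on the x-value, so each residual has its own variance
    lev = [1 / n + (xi - x_bar) ** 2 / sxx for xi in x]
    return [e / (s * math.sqrt(1 - h)) for e, h in zip(resid, lev)]

# Hypothetical data; the last response is deliberately far from the trend
x = [1, 2, 3, 4, 5, 6, 7, 8]
y = [2.1, 3.9, 6.2, 7.8, 10.1, 12.0, 13.9, 22.0]

std_resid = standardized_residuals(x, y)
large = [i for i, r in enumerate(std_resid) if abs(r) > 2]
print(large)  # zero-based positions of observations with |Std Resid| > 2
```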

Del residuals

Each deleted Studentized residual is calculated with a formula that is equivalent to systematically removing each observation from the data set, estimating the regression equation, and determining how well the model predicts the removed observation. Each deleted Studentized residual is also standardized by dividing an observation's deleted residual by an estimate of its standard deviation. The observation is omitted to determine how the model behaves without this observation. If the absolute value of an observation's deleted Studentized residual is greater than 2, the observation may be an outlier in your data.

Interpretation

Use the deleted Studentized residuals to detect outliers. Each observation is omitted to determine how well the model predicts the response when it is not included in the model fitting process. Deleted Studentized residuals greater than 2 or less than −2 are usually considered large. The observations that Minitab labels do not follow the proposed regression equation well. However, it is expected that you will have some unusual observations. For example, based on the criteria for large residuals, you would expect roughly 5% of your observations to be flagged as having a large residual. If the analysis reveals many unusual observations, the model likely does not adequately describe the relationship between the predictors and the response variable. For more information, go to Unusual observations.

Standardized and deleted residuals may be more useful than raw residuals in identifying outliers. They adjust for possible differences in the variance of the raw residuals due to different values of the predictors or factors.
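To illustrate the equivalence between the formula and the remove-and-refit procedure, the sketch below computes deleted Studentized residuals two ways for a one-predictor line: with a shortcut that uses only the full fit, and by literally dropping each observation and refitting. The function names and the data are hypothetical, and the shortcut shown is the standard textbook form rather than a documented Minitab routine.

```python
import math

def fit_line(x, y):
    """Least-squares intercept, slope, mean of x, and Sxx for one predictor."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    return y_bar - slope * x_bar, slope, x_bar, sxx

def sse_of(x, y, b0, b1):
    """Sum of squared residuals for the line b0 + b1 * x."""
    return sum((yi - (b0 + b1 * xi)) ** 2 for xi, yi in zip(x, y))

def deleted_t_shortcut(x, y):
    """Deleted Studentized residuals from a single fit of the full data."""
    n, p = len(x), 2
    b0, b1, x_bar, sxx = fit_line(x, y)
    sse = sse_of(x, y, b0, b1)
    out = []
    for xi, yi in zip(x, y):
        e = yi - (b0 + b1 * xi)
        h = 1 / n + (xi - x_bar) ** 2 / sxx
        # Error standard deviation estimated without observation i
        s_del = math.sqrt((sse - e ** 2 / (1 - h)) / (n - p - 1))
        out.append(e / (s_del * math.sqrt(1 - h)))
    return out

def deleted_t_refit(x, y):
    """Same quantity, found by dropping each observation and refitting."""
    out = []
    for i in range(len(x)):
        xr, yr = x[:i] + x[i + 1:], y[:i] + y[i + 1:]
        b0, b1, x_bar, sxx = fit_line(xr, yr)
        s_del = math.sqrt(sse_of(xr, yr, b0, b1) / (len(xr) - 2))
        h_new = 1 / len(xr) + (x[i] - x_bar) ** 2 / sxx
        deleted_resid = y[i] - (b0 + b1 * x[i])
        out.append(deleted_resid / (s_del * math.sqrt(1 + h_new)))
    return out

# Hypothetical data; the last response is deliberately far from the trend
x = [1, 2, 3, 4, 5, 6, 7, 8]
y = [2.1, 3.9, 6.2, 7.8, 10.1, 12.0, 13.9, 22.0]
shortcut = deleted_t_shortcut(x, y)
refit = deleted_t_refit(x, y)
```

The two lists agree up to floating-point rounding, which is why the refitting never has to be carried out in practice.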

Hi (leverage)

Hi, also known as leverage, measures the distance from an observation's x-value to the average of the x-values for all observations in a data set.

Interpretation

Hi values fall between 0 and 1. Minitab identifies observations with leverage values greater than 3p/n or 0.99, whichever is smaller, with an X in the Fits and Diagnostics for Unusual Observations table. In 3p/n, p is the number of coefficients in the model, and n is the number of observations. The observations that Minitab labels with an 'X' may be influential.
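The flagging rule can be sketched for a model with one predictor and a constant, where the leverage has the closed form 1/n + (xᵢ − x̄)²/Sxx. The closed form applies only to this simple case, and the function name and data are hypothetical.

```python
def leverages(x):
    """Leverage (Hi) of each observation for a one-predictor model with a constant."""
    n = len(x)
    x_bar = sum(x) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    return [1 / n + (xi - x_bar) ** 2 / sxx for xi in x]

# Hypothetical x-values; the last one is far from the others
x = [1, 2, 3, 4, 5, 6, 7, 20]
n, p = len(x), 2  # p = 2 coefficients: constant and slope

hi = leverages(x)
cutoff = min(3 * p / n, 0.99)
flagged = [i for i, h in enumerate(hi) if h > cutoff]
print(flagged)  # zero-based positions of observations that would get an 'X'
```

As a check on the calculation, the leverages of all observations sum to p, the number of coefficients.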

Influential observations have a disproportionate effect on the model and can produce misleading results. For example, the inclusion or exclusion of an influential point can change whether a coefficient is statistically significant or not. Influential observations can be leverage points, outliers, or both.

If you see an influential observation, determine whether the observation is a data-entry or measurement error. If the observation is neither a data-entry error nor a measurement error, determine how influential the observation is. First, fit the model with and without the observation. Then, compare the coefficients, p-values, R², and other model information. If the model changes significantly when you remove the influential observation, examine the model further to determine if you have incorrectly specified the model. You may need to gather more data to resolve the issue.

Cook's distance (D)

Cook's distance (D) measures the effect that an observation has on the set of coefficients in a linear model. Cook's distance considers both the leverage value and the standardized residual of each observation to determine the observation's effect.

Interpretation

Observations with a large D may be considered influential. A commonly used criterion for a large D-value is when D is greater than the median of the F-distribution: F(0.5, p, n-p), where p is the number of model terms, including the constant, and n is the number of observations. Another way to examine the D-values is to compare them to one another using a graph, such as an individual value plot. Observations with large D-values relative to the others may be influential.
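A sketch of how D combines the standardized residual and the leverage, using the common textbook form Dᵢ = (rᵢ²/p)·hᵢ/(1 − hᵢ) for a one-predictor line. The function name and data are hypothetical, and the example compares the D-values with one another rather than with the F-distribution median, which needs a statistics library.

```python
def cooks_distance(x, y):
    """Cook's D for each observation in a one-predictor least-squares fit."""
    n, p = len(x), 2  # p counts the constant and the slope
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    intercept = y_bar - slope * x_bar
    resid = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    s2 = sum(e ** 2 for e in resid) / (n - p)
    out = []
    for xi, e in zip(x, resid):
        h = 1 / n + (xi - x_bar) ** 2 / sxx
        r2 = e ** 2 / (s2 * (1 - h))  # squared standardized residual
        out.append(r2 / p * h / (1 - h))
    return out

# Hypothetical data; the last response is deliberately far from the trend
x = [1, 2, 3, 4, 5, 6, 7, 8]
y = [2.1, 3.9, 6.2, 7.8, 10.1, 12.0, 13.9, 22.0]

d = cooks_distance(x, y)
most_influential = d.index(max(d))
print(most_influential)  # zero-based position of the largest D
```

If SciPy is available, `scipy.stats.f.ppf(0.5, p, n - p)` returns the median of the F-distribution for the criterion described above.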

Influential observations have a disproportionate effect on the model and can produce misleading results. For example, the inclusion or exclusion of an influential point can change whether a coefficient is statistically significant or not. Influential observations can be leverage points, outliers, or both.

If you see an influential observation, determine whether the observation is a data-entry or measurement error. If the observation is neither a data-entry error nor a measurement error, determine how influential the observation is. First, fit the model with and without the observation. Then, compare the coefficients, p-values, R², and other model information. If the model changes significantly when you remove the influential observation, examine the model further to determine if you have incorrectly specified the model. You may need to gather more data to resolve the issue.

DFITS

DFITS measures the effect each observation has on the fitted values in a linear model. DFITS represents approximately the number of standard deviations that the fitted value changes when each observation is removed from the data set and the model is refit.
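The sketch below computes DFITS for a one-predictor line as the deleted Studentized residual multiplied by sqrt(hᵢ/(1 − hᵢ)), which is the usual textbook form. The function name, the data, and the cutoff 2·sqrt(p/n) used for flagging are assumptions of this example rather than details taken from Minitab's implementation.

```python
import math

def dfits(x, y):
    """DFITS for each observation in a one-predictor least-squares fit."""
    n, p = len(x), 2  # p counts the constant and the slope
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    intercept = y_bar - slope * x_bar
    resid = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    sse = sum(e ** 2 for e in resid)
    out = []
    for xi, e in zip(x, resid):
        h = 1 / n + (xi - x_bar) ** 2 / sxx
        # Error standard deviation estimated without this observation
        s_del = math.sqrt((sse - e ** 2 / (1 - h)) / (n - p - 1))
        t_del = e / (s_del * math.sqrt(1 - h))  # deleted Studentized residual
        out.append(t_del * math.sqrt(h / (1 - h)))
    return out

# Hypothetical data; the last response is deliberately far from the trend
x = [1, 2, 3, 4, 5, 6, 7, 8]
y = [2.1, 3.9, 6.2, 7.8, 10.1, 12.0, 13.9, 22.0]

values = dfits(x, y)
cutoff = 2 * math.sqrt(2 / len(x))  # assumed conventional cutoff, 2 * sqrt(p / n)
flagged = [i for i, v in enumerate(values) if abs(v) > cutoff]
print(flagged)  # zero-based positions of observations with large |DFITS|
```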

Interpretation

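Observations with a large DFITS value may be influential. A commonly used criterion for a large DFITS value is an absolute value greater than 2√(p/n), where p and n are defined as follows:

| Term | Description |
|---|---|
| p | the number of model terms |
| n | the number of observations |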

Influential observations have a disproportionate effect on the model and can produce misleading results. For example, the inclusion or exclusion of an influential point can change whether a coefficient is statistically significant or not. Influential observations can be leverage points, outliers, or both.

If you see an influential observation, determine whether the observation is a data-entry or measurement error. If the observation is neither a data-entry error nor a measurement error, determine how influential the observation is. First, fit the model with and without the observation. Then, compare the coefficients, p-values, R², and other model information. If the model changes significantly when you remove the influential observation, examine the model further to determine if you have incorrectly specified the model. You may need to gather more data to resolve the issue.

Durbin-Watson statistic

Use the Durbin-Watson statistic to test for the presence of autocorrelation in the errors of a regression model. Autocorrelation means that the errors of adjacent observations are correlated. If the errors are correlated, then least-squares regression can underestimate the standard error of the coefficients. Underestimated standard errors can make your predictors seem to be significant when they are not.

Interpretation

The Durbin-Watson statistic determines whether or not the correlation between adjacent error terms is zero. To reach a conclusion from the test, you will need to compare the displayed statistic with lower and upper bounds in a table. To see a table for sample sizes up to 200 and up to 21 terms, go to Test for autocorrelation by using the Durbin-Watson statistic.

The Durbin-Watson statistic tests for first-order autocorrelation. To look for other time-order patterns, look at a plot of the residuals versus the order of the data.
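For reference, the statistic itself is the sum of squared differences between successive residuals divided by the sum of squared residuals. The sketch below uses that standard definition with two small, made-up residual sequences; the function name is hypothetical, and the comparison with table bounds is not shown.

```python
def durbin_watson(residuals):
    """Durbin-Watson statistic for residuals listed in time order."""
    num = sum((residuals[t] - residuals[t - 1]) ** 2
              for t in range(1, len(residuals)))
    den = sum(e ** 2 for e in residuals)
    return num / den

# Made-up residuals: signs that alternate vs. signs that persist
dw_alternating = durbin_watson([1, -1, 1, -1])
dw_persistent = durbin_watson([1, 1, -1, -1])
print(dw_alternating)  # 3.0
print(dw_persistent)   # 1.0
```

The statistic ranges from 0 to 4. Values near 2 are consistent with no first-order autocorrelation, values well below 2 suggest positive autocorrelation, and values well above 2 suggest negative autocorrelation.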

Fits and diagnostics for a test data set

Interpretation

Generally, you use the fits and diagnostics from the test data set the same way that you use those statistics for the training data set. Examine the fits and confidence intervals to see the precision of the estimates. Examine the residuals to see the amount of error. Examine the unusual data points to see predictor values where the model might not fit well.

One difference is that unusual observations in the test set cannot affect the estimation of the model. Instead, a point with high leverage indicates a place where the test data set represents an extrapolation relative to the training data set. Use caution when you interpret predictions that extrapolate beyond the region of data used to estimate the model.

Minitab does not display deleted residuals, Cook's D, or DFITS for the test data set. Deleted residuals show how well the model predicts the response when an observation is not in the model fitting process. Cook's D measures the effect that an observation has on the set of coefficients in a linear model. DFITS measures the effect each observation has on the fitted values in a linear model. Because none of the observations in the test data set are in the model fitting process, none of these statistics have any interpretation for the test data set.