Note

This command is available with the Predictive Analytics Module.

A team of researchers collects data about factors that affect a quality characteristic of baked pretzels. Variables include process settings, like Mix Tool, and grain properties, like Flour Protein.

As part of the initial exploration of the data, the researchers decide to use Discover Key Predictors, which compares models while sequentially removing unimportant predictors. The researchers hope to identify the key predictors that have large effects on the quality characteristic and to gain more insight into the relationships between the quality characteristic and those predictors.

- Open the sample data, PretzelAcceptability.MWX.

- Choose .

- From the drop-down list, select Binary response.

- In Response, enter 'Acceptable Pretzel'.

- In Response event, select 1 to indicate that the pretzel is acceptable.

- In Continuous predictors, enter 'Flour Protein'-'Bulk Density'.

- In Categorical predictors, enter 'Mix Tool'-'Kiln Method'.

- Select Predictor Elimination.

- In Maximum number of elimination steps, enter 29.

- Click OK in each dialog box.

Interpret the results

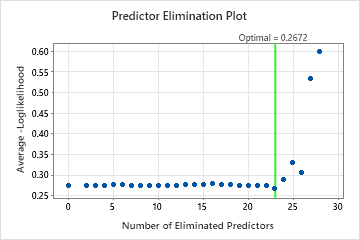

For this analysis, Minitab Statistical Software compares 28 models. The analysis takes fewer steps than the maximum of 29 because the Foam Stability predictor has an importance score of 0 in the first model, so the algorithm eliminates 2 predictors, Foam Stability and Bulk Density, in the first step. The asterisk in the Model column of the Model Evaluation table marks the model with the smallest value of the average –loglikelihood statistic, which is model 23. The results that follow the Model Evaluation table are for model 23.

Although model 23 has the smallest value of the average –loglikelihood statistic, other models have similar values. The team can click Select Alternative Model to produce results for other models from the Model Evaluation table.
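As a rough illustration of how the asterisk is assigned, the following Python sketch picks the model with the smallest average -loglikelihood from the values in the Model Selection by Eliminating Unimportant Predictors table. The tolerance used to flag "similar" models is a hypothetical choice made only for this example; the software does not report such a threshold.

```python
# Average -loglikelihood for models 1..28, copied from the
# "Model Selection by Eliminating Unimportant Predictors" table.
avg_neg_loglik = [
    0.273936, 0.274186, 0.273843, 0.274350, 0.274943, 0.275553, 0.274811,
    0.274258, 0.274185, 0.274077, 0.273598, 0.274358, 0.275374, 0.276082,
    0.275595, 0.277810, 0.276436, 0.276159, 0.273537, 0.273455, 0.272848,
    0.272629, 0.267184, 0.288621, 0.330342, 0.305993, 0.534345, 0.599837,
]

# Model numbers start at 1, so enumerate from 1 and minimize on the value.
best_model, best_value = min(
    enumerate(avg_neg_loglik, start=1), key=lambda pair: pair[1]
)

# Hypothetical tolerance for calling another model "similar" to the best one.
TOLERANCE = 0.006
near_best = [
    model
    for model, value in enumerate(avg_neg_loglik, start=1)
    if value - best_value <= TOLERANCE
]

print(best_model, best_value)  # 23 0.267184
print(near_best)               # [21, 22, 23]
```

With this tolerance, models 21 and 22 are candidates that the team might inspect as alternatives; a looser tolerance would admit more models.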

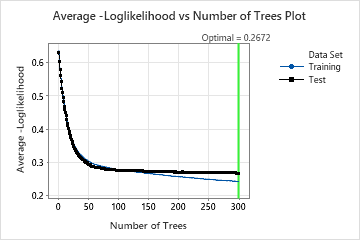

In the results for model 23, the Average –Loglikelihood vs. Number of Trees plot shows that the optimal number of trees, 299, is almost equal to the 300 trees grown in the analysis. Because the optimum is so close to the limit, a model with more trees might perform better. The team can click Tune Hyperparameters to increase the number of trees and to see whether changes to other hyperparameters improve the performance of the model.

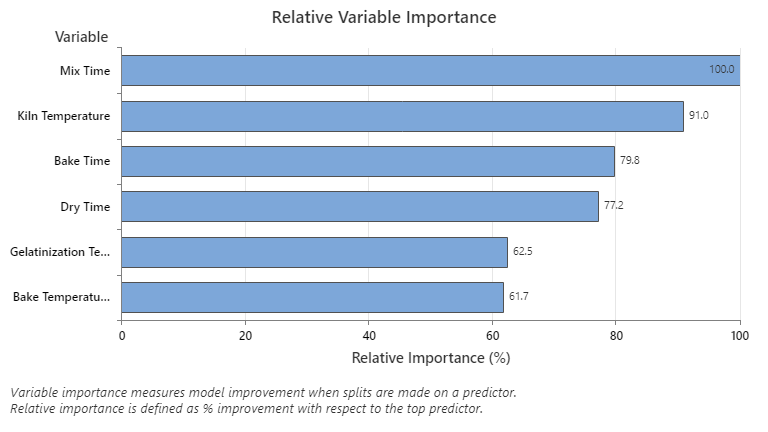

The Relative Variable Importance graph plots the predictors in order of their effect on model improvement when splits are made on a predictor over the sequence of trees. The most important predictor variable is Mix Time. Because the importance of the top predictor, Mix Time, is set to 100%, the next most important variable, Kiln Temperature, has a relative importance of 91.0%. That is, Kiln Temperature is 91.0% as important as Mix Time.
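The scaling behind these percentages can be sketched in a few lines of Python. The raw importance scores below are hypothetical values chosen only so that the ratio matches the 91.0% reported above; the output does not show the unscaled scores.

```python
def relative_importance(raw_scores):
    """Scale raw importance scores so that the top predictor is 100%."""
    top = max(raw_scores.values())
    if top <= 0:
        raise ValueError("at least one predictor must have positive importance")
    # Sort from most to least important, then express each score as a
    # percentage of the top score.
    ranked = sorted(raw_scores.items(), key=lambda item: item[1], reverse=True)
    return {name: 100.0 * score / top for name, score in ranked}


# Hypothetical raw scores (not taken from the analysis output).
raw = {"Kiln Temperature": 45.5, "Mix Time": 50.0}
relative = relative_importance(raw)

for name, percent in relative.items():
    print(f"{name}: {percent:.1f}%")
```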

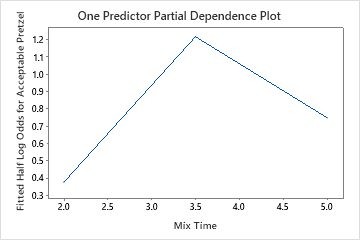

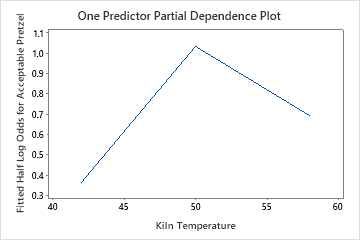

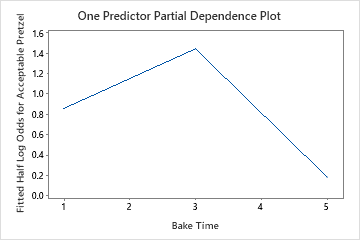

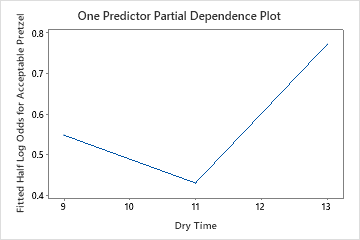

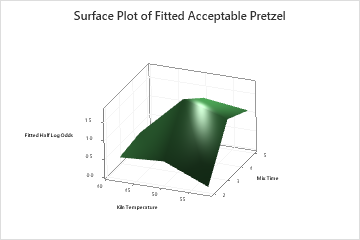

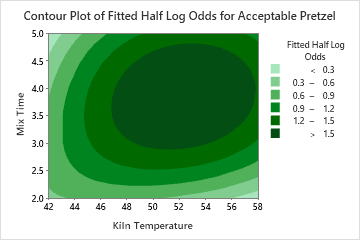

Use the partial dependence plots to gain insight into how the important variables or pairs of variables affect the fitted response values. The fitted response values are on the 1/2 log odds scale. The partial dependence plots show whether the relationship between the response and a variable is linear, monotonic, or more complex.
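To read values from the vertical axis of these plots, it can help to convert them back to odds or probabilities. The sketch below assumes that the plotted value f is one half of the log odds of the event, so that odds = exp(2f) and probability = 1 / (1 + exp(-2f)). The function names are illustrative and are not part of the software.

```python
import math


def half_log_odds_to_odds(f):
    """Convert a value on the 1/2 log odds scale to odds of the event."""
    return math.exp(2.0 * f)


def half_log_odds_to_probability(f):
    """Convert a value on the 1/2 log odds scale to an event probability."""
    return 1.0 / (1.0 + math.exp(-2.0 * f))


# Illustrative axis values, not read from the plots in this example.
for f in (-1.0, 0.0, 1.0):
    odds = half_log_odds_to_odds(f)
    prob = half_log_odds_to_probability(f)
    print(f"f = {f:+.1f}  odds = {odds:.3f}  probability = {prob:.3f}")
```

Under this assumption, a fitted value of 0 corresponds to even odds, and larger fitted values always correspond to higher odds of an acceptable pretzel.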

The one-predictor partial dependence plots show that medium values of Mix Time, Kiln Temperature, and Bake Time increase the odds of an acceptable pretzel. A medium value of Dry Time decreases the odds of an acceptable pretzel. The researchers can also produce plots for other variables.

The two-predictor partial dependence plot of Mix Time and Kiln Temperature shows a more complex relationship between the two variables and the response. While a medium value of either Mix Time or Kiln Temperature increases the odds of an acceptable pretzel, the plot shows that the best odds occur when both variables are at medium values. The researchers can also produce plots for other pairs of variables.

Method

| Criterion for selecting optimal number of trees | Maximum loglikelihood |
|---|---|
| Model validation | 70/30% training/test sets |
| Learning rate | 0.05 |
| Subsample selection method | Completely random |
| Subsample fraction | 0.5 |
| Maximum terminal nodes per tree | 6 |
| Minimum terminal node size | 3 |
| Number of predictors selected for node splitting | Total number of predictors = 29 |
| Rows used | 5000 |

Binary Response Information

| Variable | Class | Training Count | Training % | Test Count | Test % |
|---|---|---|---|---|---|
| Acceptable Pretzel | 1 (Event) | 2160 | 61.82 | 943 | 62.62 |
| | 0 | 1334 | 38.18 | 563 | 37.38 |
| | All | 3494 | 100.00 | 1506 | 100.00 |

Model Selection by Eliminating Unimportant Predictors

| Model | Optimal Number of Trees | Average -Loglikelihood | Number of Predictors | Eliminated Predictors |
|---|---|---|---|---|
| 1 | 268 | 0.273936 | 29 | None |
| 2 | 268 | 0.274186 | 27 | Foam Stability, Bulk Density |
| 3 | 234 | 0.273843 | 26 | Least Gelation Concentration |
| 4 | 233 | 0.274350 | 25 | Oven Mode 2 |
| 5 | 232 | 0.274943 | 24 | Kiln Method |
| 6 | 273 | 0.275553 | 23 | Oven Mode 1 |
| 7 | 244 | 0.274811 | 22 | Mix Speed |
| 8 | 268 | 0.274258 | 21 | Oven Mode 3 |
| 9 | 272 | 0.274185 | 20 | Resting Surface |
| 10 | 232 | 0.274077 | 19 | Bake Temperature 3 |
| 11 | 287 | 0.273598 | 18 | Mix Tool |
| 12 | 227 | 0.274358 | 17 | Bake Temperature 1 |
| 13 | 276 | 0.275374 | 16 | Rest Time |
| 14 | 272 | 0.276082 | 15 | Water |
| 15 | 268 | 0.275595 | 14 | Caustic Concentration |
| 16 | 268 | 0.277810 | 13 | Swelling Capacity |
| 17 | 253 | 0.276436 | 12 | Emulsion Stability |
| 18 | 231 | 0.276159 | 11 | Emulsion Activity |
| 19 | 268 | 0.273537 | 10 | Water Absorption Capacity |
| 20 | 260 | 0.273455 | 9 | Oil Absorption Capacity |
| 21 | 299 | 0.272848 | 8 | Flour Protein |
| 22 | 278 | 0.272629 | 7 | Foam Capacity |
| 23* | 299 | 0.267184 | 6 | Flour Size |
| 24 | 297 | 0.288621 | 5 | Bake Temperature 2 |
| 25 | 234 | 0.330342 | 4 | Dry Time |
| 26 | 290 | 0.305993 | 3 | Gelatinization Temperature |
| 27 | 245 | 0.534345 | 2 | Bake Time |
| 28 | 146 | 0.599837 | 1 | Kiln Temperature |

Model Summary

| Total predictors | 6 |
|---|---|
| Important predictors | 6 |
| Number of trees grown | 300 |
| Optimal number of trees | 299 |

| Statistics | Training | Test |

|---|---|---|

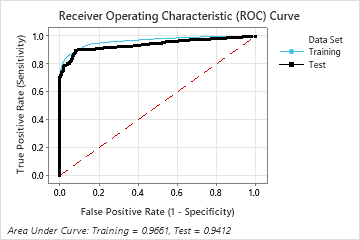

| Average -loglikelihood | 0.2418 | 0.2672 |

| Area under ROC curve | 0.9661 | 0.9412 |

| 95% CI | (0.9608, 0.9713) | (0.9295, 0.9529) |

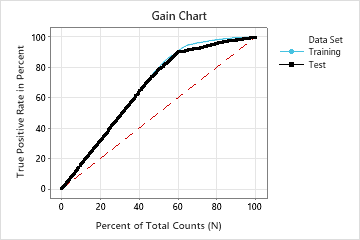

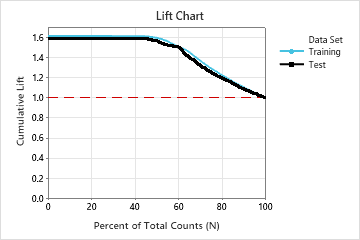

| Lift | 1.6176 | 1.5970 |

| Misclassification rate | 0.0970 | 0.0963 |
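For readers unfamiliar with the average -loglikelihood statistic, the following Python sketch shows the usual definition for a binary response: the negative mean of the log of the probability that the model assigns to each row's actual class. This is the standard formula and is assumed, not confirmed, to match the software's calculation. The events and probabilities are hypothetical.

```python
import math


def average_neg_loglikelihood(events, probabilities):
    """Mean of -log(probability assigned to the observed class).

    events: 1 for the event, 0 for the nonevent.
    probabilities: predicted event probabilities, strictly between 0 and 1.
    """
    if not events or len(events) != len(probabilities):
        raise ValueError("inputs must be non-empty and of equal length")
    total = 0.0
    for y, p in zip(events, probabilities):
        total += math.log(p) if y == 1 else math.log(1.0 - p)
    return -total / len(events)


# Hypothetical data: five rows with their actual classes.
events = [1, 1, 0, 1, 0]
confident = [0.9, 0.8, 0.2, 0.7, 0.1]  # mostly correct, fairly confident
uninformative = [0.5] * len(events)    # always predicts 50%

print(average_neg_loglikelihood(events, confident))
print(average_neg_loglikelihood(events, uninformative))  # equals ln(2)
```

Smaller values indicate predicted probabilities that agree better with the observed classes, which is why the analysis selects the model with the smallest value.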

Confusion Matrix

| Actual Class | Training Count | Training Predicted 1 | Training Predicted 0 | Training % Correct | Test Count | Test Predicted 1 | Test Predicted 0 | Test % Correct |
|---|---|---|---|---|---|---|---|---|
| 1 (Event) | 2160 | 1942 | 218 | 89.91 | 943 | 846 | 97 | 89.71 |
| 0 | 1334 | 121 | 1213 | 90.93 | 563 | 48 | 515 | 91.47 |
| All | 3494 | 2063 | 1431 | 90.30 | 1506 | 894 | 612 | 90.37 |

| Statistics | Training (%) | Test (%) |
|---|---|---|
| True positive rate (sensitivity or power) | 89.91 | 89.71 |
| False positive rate (type I error) | 9.07 | 8.53 |
| False negative rate (type II error) | 10.09 | 10.29 |
| True negative rate (specificity) | 90.93 | 91.47 |
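The four rates above follow directly from the counts in the confusion matrix. The Python sketch below recomputes them from the training and test counts reported in this example; the function and key names are illustrative.

```python
def classification_rates(tp, fn, fp, tn):
    """Return the four confusion-matrix rates as percentages.

    tp: events predicted as events       fn: events predicted as nonevents
    fp: nonevents predicted as events    tn: nonevents predicted as nonevents
    """
    events = tp + fn
    nonevents = fp + tn
    return {
        "true_positive_rate": 100.0 * tp / events,
        "false_positive_rate": 100.0 * fp / nonevents,
        "false_negative_rate": 100.0 * fn / events,
        "true_negative_rate": 100.0 * tn / nonevents,
    }


# Counts copied from the Confusion Matrix table.
training = classification_rates(tp=1942, fn=218, fp=121, tn=1213)
test = classification_rates(tp=846, fn=97, fp=48, tn=515)

for name in training:
    print(f"{name}: training {training[name]:.2f}, test {test[name]:.2f}")
```

The similar training and test rates suggest that the model performs about as well on new data as on the data used to fit it.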

Misclassification

| Actual Class | Training Count | Training Misclassed | Training % Error | Test Count | Test Misclassed | Test % Error |
|---|---|---|---|---|---|---|
| 1 (Event) | 2160 | 218 | 10.09 | 943 | 97 | 10.29 |
| 0 | 1334 | 121 | 9.07 | 563 | 48 | 8.53 |
| All | 3494 | 339 | 9.70 | 1506 | 145 | 9.63 |
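As a quick consistency check, the overall error in the All row is the total number of misclassified rows divided by the total number of rows. The Python sketch below reproduces that arithmetic from the per-class counts in the table; the helper name is illustrative.

```python
def overall_error_percent(class_counts):
    """Overall % error from (row count, misclassified count) pairs per class."""
    total_rows = sum(count for count, _ in class_counts)
    total_misclassed = sum(misclassed for _, misclassed in class_counts)
    return total_misclassed, 100.0 * total_misclassed / total_rows


# (count, misclassed) for class 1 (event) and class 0, from the table.
training_misclassed, training_error = overall_error_percent([(2160, 218), (1334, 121)])
test_misclassed, test_error = overall_error_percent([(943, 97), (563, 48)])

print(training_misclassed, round(training_error, 2))  # 339 9.7
print(test_misclassed, round(test_error, 2))          # 145 9.63
```

These percentages are the misclassification rates in the Model Summary table, 0.0970 and 0.0963, expressed on a percent scale.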