What is sum of squares?

The sum of squares represents a measure of variation or deviation from the mean. It is calculated as a summation of the squares of the differences from the mean. The calculation of the total sum of squares considers both the sum of squares from the factors and from randomness or error.
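Written as a formula, the definition above looks like the following. The symbols are assumptions introduced here for illustration: $y_i$ for the $n$ observations and $\bar{y}$ for their mean.

```latex
SS = \sum_{i=1}^{n} \left( y_i - \bar{y} \right)^2
```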

Sum of squares in ANOVA

In analysis of variance (ANOVA), the total sum of squares helps express the total variation that can be attributed to various factors. For example, you do an experiment to test the effectiveness of three laundry detergents.

The total sum of squares = treatment sum of squares (SST) + sum of squares of the residual error (SSE)

The treatment sum of squares is the variation attributed to, or in this case between, the laundry detergents. The sum of squares of the residual error is the variation attributed to the error.

Converting the sums of squares into mean squares by dividing by their degrees of freedom lets you compare the treatment mean square with the error mean square as a ratio and determine whether there is a significant difference due to detergent. The larger this ratio is, the stronger the evidence that the detergents affect the outcome.
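The decomposition and the mean-square ratio can be sketched in Python as follows. The detergent scores are hypothetical values made up for illustration, and the variable names are not Minitab output labels.

```python
# Hypothetical cleaning scores for three detergents (illustrative data only).
groups = {
    "detergent_1": [77.0, 81.0, 71.0, 76.0, 80.0],
    "detergent_2": [72.0, 58.0, 74.0, 66.0, 70.0],
    "detergent_3": [76.0, 85.0, 82.0, 80.0, 77.0],
}

all_values = [v for values in groups.values() for v in values]
grand_mean = sum(all_values) / len(all_values)

# Total sum of squares: squared deviations of every observation from the grand mean.
ss_total = sum((v - grand_mean) ** 2 for v in all_values)

# Treatment sum of squares: variation between the detergent means.
ss_treatment = sum(
    len(values) * (sum(values) / len(values) - grand_mean) ** 2
    for values in groups.values()
)

# Residual error sum of squares: variation of observations around their own group mean.
ss_error = sum(
    (v - sum(values) / len(values)) ** 2
    for values in groups.values()
    for v in values
)

# Degrees of freedom, mean squares, and their ratio.
df_treatment = len(groups) - 1
df_error = len(all_values) - len(groups)
ms_treatment = ss_treatment / df_treatment
ms_error = ss_error / df_error
f_ratio = ms_treatment / ms_error

print(f"SS total = {ss_total:.3f}")
print(f"SS treatment = {ss_treatment:.3f}, SS error = {ss_error:.3f}")
print(f"MS treatment / MS error = {f_ratio:.3f}")
```

In this sketch the treatment and error sums of squares add up to the total, which is the identity stated above.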

Sum of squares in regression

In regression, the total sum of squares helps express the total variation of the y's. For example, you collect data to determine a model explaining overall sales as a function of your advertising budget.

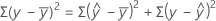

The total sum of squares = regression sum of squares (SSR) + sum of squares of the residual error (SSE)

The regression sum of squares is the variation attributed to the relationship between the x's and y's, or in this case between the advertising budget and your sales. The sum of squares of the residual error is the variation attributed to the error.

By comparing the regression sum of squares to the total sum of squares, you determine the proportion of the total variation that is explained by the regression model (R², the coefficient of determination). The larger this value is, the better the model explains sales as a function of advertising budget.
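As a minimal sketch of this decomposition, the following Python fits a simple linear regression by least squares and computes the three sums of squares and R². The budget and sales figures are hypothetical and chosen only to keep the arithmetic small.

```python
# Hypothetical advertising budgets (x) and sales (y); illustrative values only.
budget = [1.0, 2.0, 3.0, 4.0, 5.0]
sales = [2.0, 4.0, 5.0, 4.0, 5.0]

n = len(budget)
x_mean = sum(budget) / n
y_mean = sum(sales) / n

# Least-squares slope and intercept for a one-predictor model.
sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(budget, sales))
sxx = sum((x - x_mean) ** 2 for x in budget)
slope = sxy / sxx
intercept = y_mean - slope * x_mean

fitted = [intercept + slope * x for x in budget]

# Total, regression, and residual error sums of squares.
ss_total = sum((y - y_mean) ** 2 for y in sales)
ss_regression = sum((f - y_mean) ** 2 for f in fitted)
ss_error = sum((y - f) ** 2 for y, f in zip(sales, fitted))

# Proportion of the total variation explained by the model.
r_squared = ss_regression / ss_total

print(f"SS total = {ss_total:.3f}")
print(f"SS regression = {ss_regression:.3f}, SS error = {ss_error:.3f}")
print(f"R-squared = {r_squared:.3f}")
```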

Comparison of sequential sums of squares and adjusted sums of squares

- Sequential sums of squares

Sequential sums of squares depend on the order the factors are entered into the model. A sequential sum of squares is the unique portion of SS Regression explained by a factor, given any previously entered factors.

For example, if you have a model with three factors, X1, X2, and X3, the sequential sum of squares for X2 shows how much of the remaining variation X2 explains, given that X1 is already in the model. To obtain a different sequence of factors, repeat the regression procedure entering the factors in a different order.

- Adjusted sums of squares

Adjusted sums of squares do not depend on the order the factors are entered into the model. An adjusted sum of squares is the unique portion of SS Regression explained by a factor, given all other factors in the model, regardless of the order they were entered into the model.

For example, if you have a model with three factors, X1, X2, and X3, the adjusted sum of squares for X2 shows how much of the remaining variation X2 explains, given that X1 and X3 are also in the model.

When will the sequential and adjusted sums of squares be the same?

The sequential and adjusted sums of squares are always the same for the last term in the model. For example, if your model contains the terms A, B, and C (in that order), then both sums of squares for C represent the reduction in the sum of squares of the residual error that occurs when C is added to a model containing both A and B.
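The difference between the two kinds of sums of squares can be sketched by refitting nested models. The Python below is an illustration under stated assumptions, not Minitab's implementation: the data for X1, X2, X3, and y are hypothetical, and `solve` and `regression_ss` are helper functions written for this example.

```python
def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def regression_ss(predictors, y):
    """Regression sum of squares for a model with an intercept plus the given columns."""
    n = len(y)
    columns = [[1.0] * n] + [list(p) for p in predictors]
    # Normal equations (X'X) b = X'y for the least-squares coefficients.
    xtx = [[sum(u * v for u, v in zip(ci, cj)) for cj in columns] for ci in columns]
    xty = [sum(u * v for u, v in zip(ci, y)) for ci in columns]
    coef = solve(xtx, xty)
    fitted = [sum(b * col[i] for b, col in zip(coef, columns)) for i in range(n)]
    y_mean = sum(y) / n
    return sum((f - y_mean) ** 2 for f in fitted)


# Hypothetical, correlated predictors and response (illustrative data only).
x1 = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
x2 = [2.0, 1.0, 4.0, 3.0, 6.0, 5.0, 8.0, 7.0]
x3 = [1.0, 3.0, 2.0, 5.0, 4.0, 4.0, 6.0, 9.0]
y = [3.1, 4.0, 6.2, 7.1, 9.8, 9.9, 13.2, 14.5]

ss_full = regression_ss([x1, x2, x3], y)

# Sequential: each term is judged given only the terms entered before it (order X1, X2, X3).
sequential = {
    "X1": regression_ss([x1], y),
    "X2": regression_ss([x1, x2], y) - regression_ss([x1], y),
    "X3": ss_full - regression_ss([x1, x2], y),
}

# Adjusted: each term is judged given all other terms in the model.
adjusted = {
    "X1": ss_full - regression_ss([x2, x3], y),
    "X2": ss_full - regression_ss([x1, x3], y),
    "X3": ss_full - regression_ss([x1, x2], y),
}

for term in ("X1", "X2", "X3"):
    print(f"{term}: sequential = {sequential[term]:.4f}, adjusted = {adjusted[term]:.4f}")
```

For the last term entered (X3 here), both quantities are the same difference between the full model and the model without that term, so they agree. The sequential values also add up to the regression sum of squares of the full model.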

The sequential and adjusted sums of squares will be the same for all terms if the design matrix is orthogonal. The most common case where this occurs is with factorial and fractional factorial designs (with no covariates) when analyzed in coded units. In these designs, the columns in the design matrix for all main effects and interactions are orthogonal to each other. Plackett-Burman designs have orthogonal columns for main effects (usually the only terms in the model), but interaction terms, if any, may be partially confounded with other terms (that is, not orthogonal). In response surface designs, the columns for squared terms are not orthogonal to each other.

For any design, if the design matrix is in uncoded units, then there may be columns that are not orthogonal unless the factor levels are still centered at zero.
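A quick way to see the effect of coding is to compare dot products between design-matrix columns. The sketch below uses a 2×2 full factorial; the uncoded factor levels (10/20 and 100/200) are hypothetical, and a dot product of zero is used as the orthogonality check.

```python
from itertools import combinations


def dot(u, v):
    """Dot product of two equal-length columns."""
    return sum(a * b for a, b in zip(u, v))


def pairwise_dots(design):
    """Dot product for every pair of columns in a design given as {name: column}."""
    return {(p, q): dot(design[p], design[q]) for p, q in combinations(design, 2)}


# Two-level full factorial in coded units (-1 = low, +1 = high).
coded = {
    "Constant": [1, 1, 1, 1],
    "A": [-1, 1, -1, 1],
    "B": [-1, -1, 1, 1],
}
coded["A*B"] = [a * b for a, b in zip(coded["A"], coded["B"])]

# The same runs in hypothetical uncoded units: A at 10 or 20, B at 100 or 200.
uncoded = {
    "Constant": [1, 1, 1, 1],
    "A": [10, 20, 10, 20],
    "B": [100, 100, 200, 200],
}
uncoded["A*B"] = [a * b for a, b in zip(uncoded["A"], uncoded["B"])]

coded_dots = pairwise_dots(coded)
uncoded_dots = pairwise_dots(uncoded)

print("coded:  ", coded_dots)
print("uncoded:", uncoded_dots)
```

In coded units every pair of columns has a zero dot product. In the uncoded version the levels are not centered at zero, so the same columns are no longer orthogonal.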

Can the adjusted sums of squares be less than, equal to, or greater than the sequential sums of squares?

The adjusted sums of squares can be less than, equal to, or greater than the sequential sums of squares.

Suppose you fit a model with terms A, B, C, and A*B. Let SS(A, B, C, A*B) be the sum of squares when A, B, C, and A*B are in the model. Let SS(A, B, C) be the sum of squares when A, B, and C are included in the model. Then the adjusted sum of squares for A*B is:

SS(A, B, C, A*B) - SS(A, B, C)

However, with the same terms A, B, C, A*B in the model, the sequential sum of squares for A*B depends on the order the terms are specified in the model.

Using similar notation, if the order is A, B, A*B, C, then the sequential sum of squares for A*B is:

SS(A, B, A*B) - SS(A, B)

Depending on the data and the order of the terms, any of the following can hold:

- SS(A, B, C, A*B) - SS(A, B, C) < SS(A, B, A*B) - SS(A, B), or

- SS(A, B, C, A*B) - SS(A, B, C) = SS(A, B, A*B) - SS(A, B), or

- SS(A, B, C, A*B) - SS(A, B, C) > SS(A, B, A*B) - SS(A, B)

What is uncorrected sum of squares?

The uncorrected sum of squares is calculated by squaring each value in the column and summing those squared values. That is, if the column contains $x_1, x_2, \dots, x_n$, then the sum of squares is $x_1^2 + x_2^2 + \dots + x_n^2$. Unlike the corrected sum of squares, the uncorrected sum of squares squares the data values without first subtracting the mean, so it also reflects the size of the mean and not only the variation around it.

In Minitab, you can use descriptive statistics to display the uncorrected sum of squares. You can also use the sum of squares (SSQ) function in the Calculator to calculate the uncorrected sum of squares for a column or row. For example, you are calculating a formula manually and you want to obtain the sum of the squares for a set of response (y) variables.

In the calculator, enter the expression: SSQ (C1)

Store the results in C2 to see the sum of the squares, uncorrected. The following worksheet shows the results from using the calculator to calculate the sum of squares of column y.

| C1 | C2 |
|---|---|
| y | Sum of Squares |
| 2.40 | 41.5304 |
| 4.60 | |
| 2.50 | |
| 1.60 | |
| 2.20 | |
| 0.98 | |

Note

Minitab omits missing values from the calculation of this function.
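The worksheet result can be reproduced outside Minitab with a few lines of Python. This is a sketch, not Minitab's code: `ssq` and `corrected_ss` are hypothetical helper names, and representing a missing value as `None` is an assumption made for this example.

```python
def ssq(values):
    """Uncorrected sum of squares; None entries are treated as missing and skipped."""
    return sum(v * v for v in values if v is not None)


def corrected_ss(values):
    """Corrected sum of squares: squared deviations from the mean of the non-missing values."""
    present = [v for v in values if v is not None]
    mean = sum(present) / len(present)
    return sum((v - mean) ** 2 for v in present)


# The y column from the worksheet above.
y = [2.40, 4.60, 2.50, 1.60, 2.20, 0.98]

uncorrected = ssq(y)
corrected = corrected_ss(y)
n = len(y)
mean = sum(y) / n

print(f"Uncorrected sum of squares = {uncorrected:.4f}")
print(f"Corrected sum of squares   = {corrected:.4f}")

# A missing entry does not change the result because it is skipped.
print(f"With a missing value       = {ssq(y + [None]):.4f}")
```

The two quantities differ by n times the squared mean, which is the part that subtracting the mean removes.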